As part of the series of discussions about synchronising RM and RSM storage, I attempt to describe how writes to RSM storage could be governed and how shielded this schema can be. What I want to describe:

- storage permission application (SPA) design

- how SPA can be used by other applications to write to RSM storage

- how shielded the SPA mechanism can be (both the SPA resources and the guarantees for other applications that use SPA)

This post covers only the first item and hints at the second.

Assumptions/wants:

- writes/deletes are governed by resource logics

- these logics specify the path to the blobs they govern (implies no function privacy for such logics)

- applications (both shielded and transparent) want to write to the blob storage and have some sort of access control

- blobs to be written are included in the transaction and are validated by the responsible logic

These assumptions are treated as axioms: we don’t question how true they are, and simply base the proposal on the assumption that they correctly represent what we want.

The schema

Storage partitioning

Let’s assume that the resource logics governing the writes (from now on: Storage Permission Logic, SPL) partition the blob storage according to the conditions under which anything can be written to or deleted from each part of the storage. We assume these conditions are unique. This is for simplicity of presentation; it doesn’t have to be like that in practice.

Conceptually, it would look something like this:

Each SPL corresponds to a certain application. Let’s call any application of this type a Storage Permission Application (SPA). Only SPAs can govern RSM storage writes.
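To make the partitioning idea concrete, here is a minimal sketch under the simplifying assumption above (one SPL per non-overlapping region). The names `StoragePermissionLogic`, `PartitionedStorage`, and the use of string path prefixes to identify regions are hypothetical and chosen only for illustration; nothing here reflects an actual RSM interface.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class StoragePermissionLogic:
    """Hypothetical stand-in for an SPL: a name plus the path prefix it governs."""
    name: str
    path_prefix: str


class PartitionedStorage:
    """Maps each storage region (a path prefix) to exactly one SPL."""

    def __init__(self) -> None:
        self._logics: Dict[str, StoragePermissionLogic] = {}

    def register(self, spl: StoragePermissionLogic) -> None:
        # Enforce the simplifying assumption: regions never overlap.
        for prefix in self._logics:
            if prefix.startswith(spl.path_prefix) or spl.path_prefix.startswith(prefix):
                raise ValueError(f"region {spl.path_prefix!r} overlaps {prefix!r}")
        self._logics[spl.path_prefix] = spl

    def governing_logic(self, path: str) -> Optional[StoragePermissionLogic]:
        # Because regions do not overlap, at most one prefix can match.
        for prefix, spl in self._logics.items():
            if path.startswith(prefix):
                return spl
        return None


storage = PartitionedStorage()
storage.register(StoragePermissionLogic("spa_a", "/spa_a/"))
storage.register(StoragePermissionLogic("spa_b", "/spa_b/"))
```

With this layout, a write to `/spa_a/some_blob` would be routed to the logic named `spa_a`, and a path outside every registered region has no governing logic and so cannot be written at all.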

How SPA could work

There are multiple possible ways to arrange SPAs; I suggest two versions.

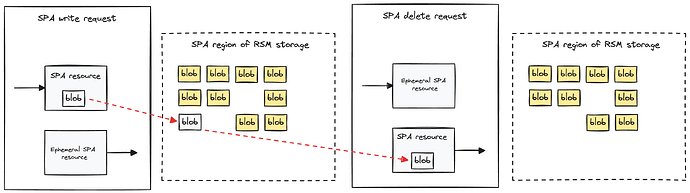

Simpler version: creating a resource is associated with blocking the relevant area in the storage. Consuming the resource unblocks the area (it can be written to again). So, creating a resource writes a blob and consuming a resource deletes it. Both transactions would have to be artificially balanced by ephemeral resources.

Less simple version: there is a storage application (SA) associated with the RSM that creates resources for each SPA and stores them publicly (e.g., in a dedicated area of the RSM storage). Whoever wants to write to the area associated with a particular SPA consumes the relevant resource. The transaction is balanced by an ephemeral resource, which contains the blob to be written and a deletion criterion.

Version 1

SPA logic:

- create:

  - non-ephemeral: request the blob write; verify the blob, the requesting party, and other constraints. Can be balanced by either an ephemeral resource or a non-ephemeral resource (i.e., deleting another blob at the same time)

  - ephemeral: can only be created to balance non-ephemeral consumption

- consume:

  - non-ephemeral: request the blob deletion; verify the requesting party and other constraints

  - ephemeral: can only be consumed to balance non-ephemeral creation

Advantages: simple and allows for dynamic deletion

Disadvantages: degenerate application design
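The Version 1 rules can be sketched roughly as follows. This is an illustration only: `Resource`, `apply_v1`, and the dictionary standing in for blob storage are hypothetical, balance is approximated by comparing the number of created and consumed resources, and the blob and requesting-party checks are left out.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Resource:
    """Hypothetical SPA resource: the storage path it blocks and the blob it carries."""
    path: str
    blob: bytes
    ephemeral: bool


def apply_v1(storage: Dict[str, bytes],
             created: List[Resource],
             consumed: List[Resource]) -> Dict[str, bytes]:
    """Validate a transaction under the Version 1 rules and return the new storage."""
    # Assumption: every resource has quantity 1, so balance is a simple count check.
    if len(created) != len(consumed):
        raise ValueError("transaction is not balanced")

    real_created = [r for r in created if not r.ephemeral]
    real_consumed = [r for r in consumed if not r.ephemeral]

    # Ephemeral resources exist only to balance the opposite non-ephemeral operation.
    if len(created) - len(real_created) > len(real_consumed):
        raise ValueError("ephemeral creation without a non-ephemeral consumption")
    if len(consumed) - len(real_consumed) > len(real_created):
        raise ValueError("ephemeral consumption without a non-ephemeral creation")

    new_storage = dict(storage)
    # Consuming a non-ephemeral resource deletes the blob and unblocks the path.
    for r in real_consumed:
        if r.path not in new_storage:
            raise ValueError(f"nothing stored at {r.path!r}")
        del new_storage[r.path]
    # Creating a non-ephemeral resource writes the blob and blocks the path.
    for r in real_created:
        if r.path in new_storage:
            raise ValueError(f"{r.path!r} is blocked")
        new_storage[r.path] = r.blob
    return new_storage


after_write = apply_v1({}, [Resource("/a/x", b"hi", False)], [Resource("/a/x", b"", True)])
after_delete = apply_v1(after_write, [Resource("/a/x", b"", True)],
                        [Resource("/a/x", b"hi", False)])
```

Deletions are processed before writes so that a single transaction can delete one blob and write another, which corresponds to balancing a non-ephemeral creation with a non-ephemeral consumption.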

Version 2

SPA logic:

- create:

  - non-ephemeral: permitted only for RSM storage providers (how to verify this? - dynamic set of entities); creates a ticket that can be consumed in order to store something in the SPA region

  - ephemeral: verify that there is a blob to be written and a deletion criterion, both of which satisfy the corresponding constraints (blob format, identity, etc.)

- consume:

  - non-ephemeral: permitted when the balancing ephemeral resource is valid

  - ephemeral: balances creation of the non-ephemeral ticket

Advantages: more meaningful use of the resource model, the deletion criterion is specified in advance

Disadvantages: the deletion criterion cannot be specified dynamically, and an external data structure (or at least the abstraction of one) has to be maintained: the ticket pool
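A rough sketch of the Version 2 ticket flow is given here. Everything in it is an assumption made for illustration: `Ticket`, `WriteRequest`, and `TicketPool` are hypothetical names, the set of storage providers is a fixed set of strings (the open question of verifying a dynamic set is not addressed), and the deletion criterion is modelled as a simple expiry time.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Tuple


@dataclass(frozen=True)
class Ticket:
    """Hypothetical non-ephemeral resource: permission to write once into a region."""
    ticket_id: int
    region: str


@dataclass(frozen=True)
class WriteRequest:
    """Hypothetical ephemeral resource: the blob plus its deletion criterion."""
    path: str
    blob: bytes
    delete_after: int  # assumed deletion criterion: an expiry timestamp


class TicketPool:
    def __init__(self, providers: Iterable[str]) -> None:
        self.providers = set(providers)
        self.tickets: Dict[int, Ticket] = {}
        self.storage: Dict[str, Tuple[bytes, int]] = {}
        self._next_id = 0

    def issue(self, issuer: str, region: str) -> Ticket:
        # Non-ephemeral creation: only storage providers may create tickets.
        if issuer not in self.providers:
            raise PermissionError(f"{issuer!r} is not a storage provider")
        ticket = Ticket(self._next_id, region)
        self._next_id += 1
        self.tickets[ticket.ticket_id] = ticket
        return ticket

    def redeem(self, ticket: Ticket, request: WriteRequest) -> None:
        # Non-ephemeral consumption: allowed only if the ephemeral resource is valid.
        if self.tickets.get(ticket.ticket_id) != ticket:
            raise ValueError("ticket is unknown or already consumed")
        if not request.path.startswith(ticket.region):
            raise ValueError("path is outside the ticket's region")
        if not request.blob or request.delete_after <= 0:
            raise ValueError("blob or deletion criterion is invalid")
        del self.tickets[ticket.ticket_id]
        self.storage[request.path] = (request.blob, request.delete_after)

    def expire(self, now: int) -> None:
        # The deletion criterion was fixed at write time and is applied here.
        self.storage = {p: v for p, v in self.storage.items() if v[1] > now}


pool = TicketPool({"provider"})
ticket = pool.issue("provider", "/spa_a/")
pool.redeem(ticket, WriteRequest("/spa_a/x", b"hi", delete_after=100))
```

The sketch shows both listed trade-offs: the deletion criterion travels with the write and cannot be changed afterwards, and the pool of outstanding tickets is state that has to live somewhere.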

What should the write constraints include?

- The blob is valid. The logic defines what it means for the blob to be valid: it might verify every field of the blob, some fields of the blob, or allow any blob to be written as long as other conditions are met.

- The user is authorised to perform the write. The logic might allow only a selected set of parties to write to the area. This might be verified with a signature associated with the user’s long-term key. Other forms of verification are possible, but we will assume this one because it is the simplest.

- Other constraints. The logic might require additional conditions to be met, e.g., only permit a write at a specific time or only permit a write when some other resources are consumed. The latter option might also be used to implement the previous constraints.
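The three kinds of constraints could be combined in a single check along these lines. This is a sketch under loud assumptions: `WriteAttempt` and `check_write` are hypothetical, blob validity is reduced to a size bound, the "other constraint" is a time window, and an HMAC from the Python standard library stands in for a real signature under the user's long-term key (a real design would use an asymmetric signature scheme).

```python
import hashlib
import hmac
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class WriteAttempt:
    blob: bytes
    user: str
    tag: bytes       # stand-in for a signature over the blob
    timestamp: int


def sign(key: bytes, blob: bytes) -> bytes:
    # Assumption: HMAC-SHA256 replaces a proper signature for illustration only.
    return hmac.new(key, blob, hashlib.sha256).digest()


def check_write(attempt: WriteAttempt,
                authorised_keys: Dict[str, bytes],
                max_blob_size: int,
                window: Tuple[int, int]) -> bool:
    # 1. The blob is valid (here: non-empty and within a size bound).
    blob_valid = 0 < len(attempt.blob) <= max_blob_size

    # 2. The user is authorised: known to the logic and the tag verifies.
    key = authorised_keys.get(attempt.user)
    authorised = key is not None and hmac.compare_digest(
        sign(key, attempt.blob), attempt.tag
    )

    # 3. Other constraints (here: the write falls inside a permitted time window).
    start, end = window
    in_window = start <= attempt.timestamp < end

    return blob_valid and authorised and in_window


keys = {"alice": b"alice-long-term-key"}
good = WriteAttempt(b"hello", "alice", sign(keys["alice"], b"hello"), timestamp=10)
```

A call such as `check_write(good, keys, max_blob_size=1024, window=(0, 100))` accepts the write, while changing the user, the blob, or the timestamp makes the corresponding check fail.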

How can SPA be used by other applications

Other applications do not deal with blobs themselves. Instead, they bind their logics to SPA logics (similar to how intent applications work) and request storing a blob by triggering the corresponding SPA logic.

For example, an application A that wants to store a blob B as part of its logic requires the presence of the relevant SPA resource containing blob B in the same action. It must also check the correspondence between the relevant fields, e.g., the identity associated with the request, maybe the blob, etc. I will provide a more concrete example and a diagram in the next part.
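Until the more concrete example arrives, the binding can be pictured with the following sketch. It is purely illustrative: `SpaResource`, `AppResource`, and `app_logic` are hypothetical names, an action is modelled as a plain list of resources, and the fields compared (SPA label, blob, identity) are one guess at what "the relevant fields" might be.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class SpaResource:
    """Hypothetical SPA resource carrying the blob to be stored."""
    spa_label: str
    blob: bytes
    requester: str


@dataclass(frozen=True)
class AppResource:
    """Hypothetical resource of application A that wants blob B stored."""
    expected_spa: str
    expected_blob: bytes
    owner: str


def app_logic(app_res: AppResource, action: List[object]) -> bool:
    """Application A's logic: valid only if a matching SPA resource is in the action."""
    return any(
        isinstance(r, SpaResource)
        and r.spa_label == app_res.expected_spa   # bound to the right SPA
        and r.blob == app_res.expected_blob       # same blob B
        and r.requester == app_res.owner          # same identity
        for r in action
    )


app_res = AppResource("spa_a", b"blob-B", "alice")
matching = SpaResource("spa_a", b"blob-B", "alice")
mismatched = SpaResource("spa_a", b"blob-B", "mallory")
```

Here application A never touches storage itself: its logic only asserts that the right SPA resource is present, and the SPA logic is then responsible for validating and performing the write.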

Consequences

- encapsulate storage operations in app logics of specific (essential) applications

- remove the `deletion_criterion` field from `app_data`

Next posts: